One of my favorite places to build is at the intersection of hardware and software. My first professional software development job involved working on diagnostic software for the K7 at AMD. What an incredible place and time for a college student! All these complex pieces fit together to build a working computer: software, a CPU, the northbridge, the southbridge, RAM, PCI cards, and so on.

When it all worked together, the system could do amazing things. But if one of those pieces had a tiny bug, the whole system could fall apart.

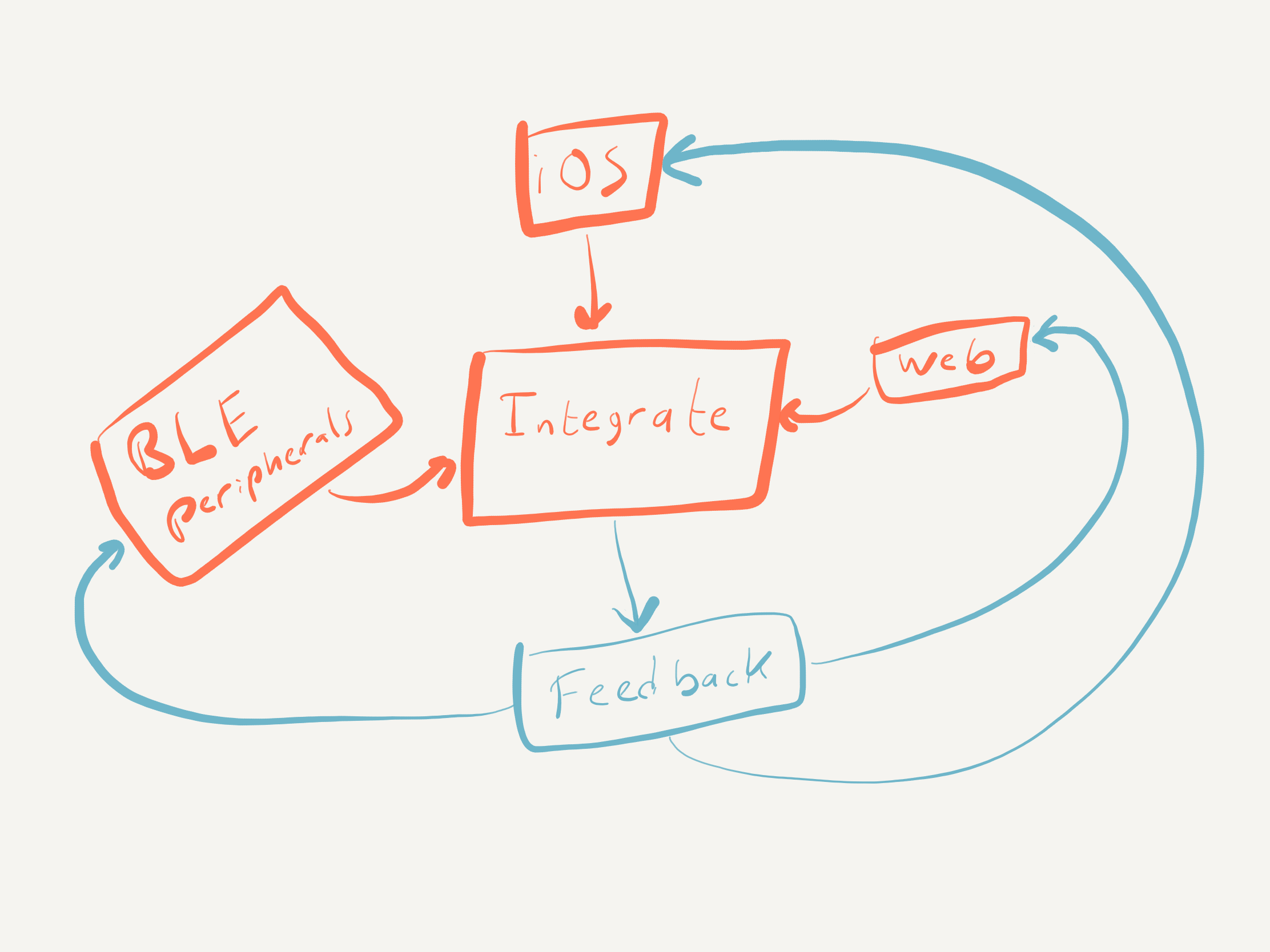

Today, our hardware systems are even more complex, even if they fit in your pocket. Smartphones and tablets almost universally offer constant connectivity, incredible computing power, sensors, displays, geolocation, and peripheral integration. With these capabilities, they have become powerful platforms for interacting with other products and linking them to the internet. So these days, my interests center on building systems with iOS apps and Bluetooth Low Energy (BLE) hardware.

Integrating multiple hardware and software platforms involves many challenges. Some difficulties stem from organizational structure and culture, and others from inexperience. And without a doubt, technical complexity also plays a significant role.

These suggestions will help you keep control of a complex software project by building feedback systems into both the development process and the system architecture. Getting software, hardware, and firmware to work together always brings unanticipated challenges. By preparing for those difficulties in advance, you can detect and fix them much earlier in the development process.

DISCLAIMER

If you're like me, the term "best practices" probably annoys you. How the heck would I know what the best practices are? I don't. Apple could have flying drones hovering over the shoulders of all their engineers, politely offering suggestions as they write code at their unbelievably neat desks.

Perhaps a Google AI writes all their BLE code. Who knows what the "best practices" are, or which organization has them? I'm simply sharing a few tactics and strategies I've used to keep projects on track. They have worked for me on many projects over the decades. They might work for you.

ABI -- Always Be Integrating

A = Always. B = Be. I = Integrating. That's my mantra, my big hardware/software strategy. It's a little like releasing a minimum viable product, except this isn't about your product/market fit; it's about your system. As Gall's law puts it, a complex system that works has invariably evolved from a simple system that worked.

Integrating helps you learn how your system works and where it might fail while the system is still simple enough to debug easily.

Continuous integration also lets you find issues with your system design while they're still cheap to fix. On one project, for instance, early integration exposed a problem with part of the peripheral hardware. The hardware worked fine in all our directed tests but reliably failed after more than a few minutes of use with our iOS app. Ultimately, we had to change the hardware design and peripheral firmware to use a different module.

If I had not performed early integration testing and lobbied to investigate the problem, we might have spent millions of dollars building firmware and final hardware on a shaky foundation. The resulting hardware and firmware rework could have killed the product.

Let us consider two alternatives to continuous integration: the Silo Farm and the Rotten Egg models.

Development in Isolation

A few large organizations I've worked with took a siloed approach to products. For example, one group builds module X. Another group works on the same product but only on module Y. The group leaders hold regular meetings to monitor and discuss progress:

"How's the firmware going?"

"Oh, it's excellent! How's the software?"

"Oh, perfect!"

As far as anyone knows, they are executing the project plan flawlessly. Each team has directed tests bolstering their confidence. Without feedback from integration testing, the project appears on track.

Unfortunately, integrating hardware peripherals always reveals surprises. Leaders without hardware experience often feel as if their teams fooled them.

"How did this happen? Last week, everything looked beautiful. Today we're scrambling to figure out why the app is crashing, and the firmware drops the BLE connection every five minutes!"

This sort of "integration wall" seems predictable to engineers who have worked on hardware projects but comes as a genuine surprise to folks with a more traditional software background. Project managers can spend entire careers on products where engineering can hedge integration risks with common frameworks and abundant computing power. BLE products, by contrast, face less common risks such as tight battery budgets, limited computing power, and low-level bit-bashing.

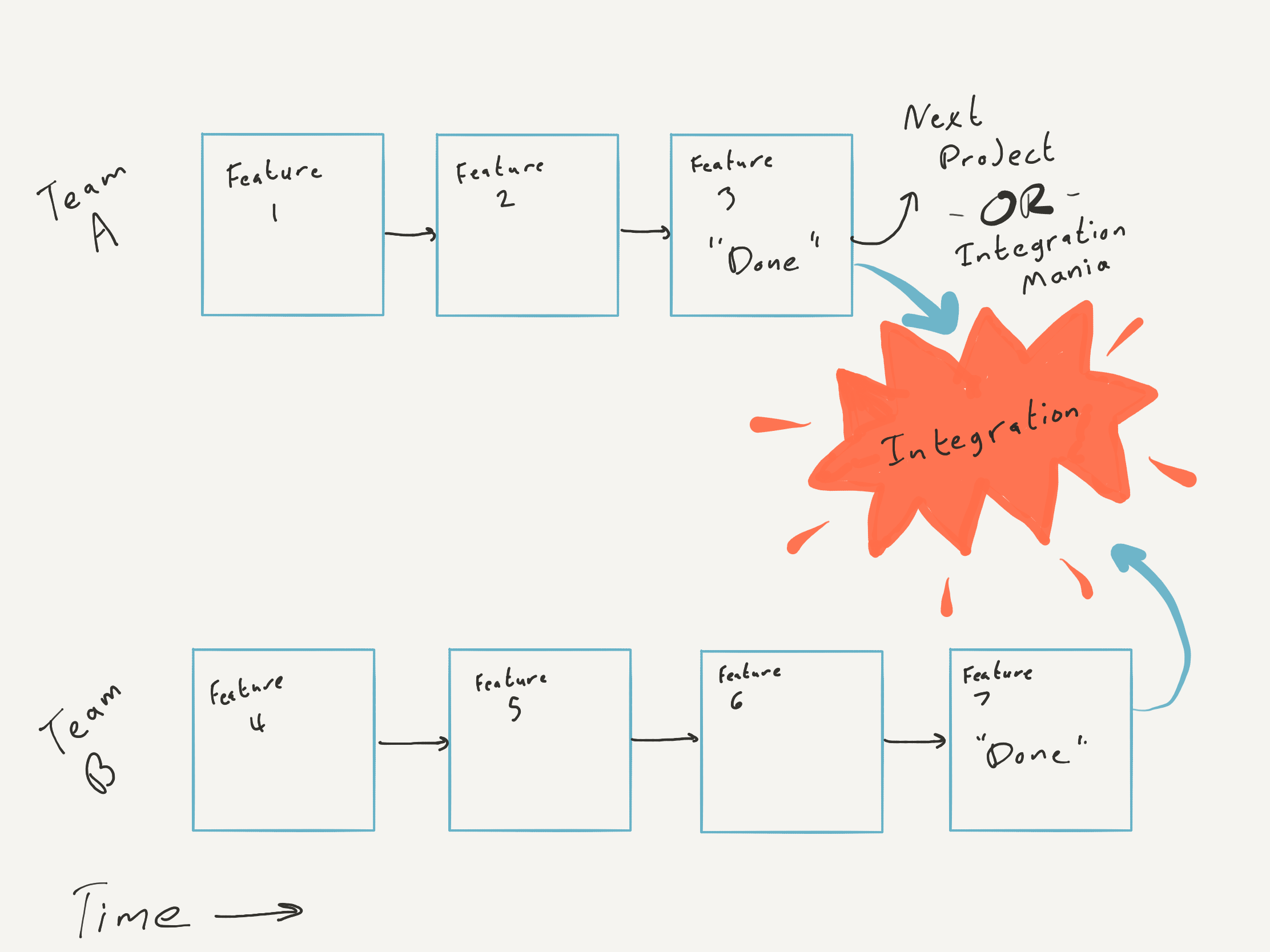

On Bluetooth projects, a siloed approach creates the illusion of fast progress while deferring the discovery of bugs, unknowns, and flawed assumptions to the project's final phase. And the late stages of a project are exactly when bugs cost the most to fix. Not ideal!

Last One is the Rotten Egg

Another less-than-ideal outcome I've observed is the "rotten egg effect." When this happens, the last team to finish gets stuck with all the integration work. "The last team to be feature complete is a rotten egg!"

This trap springs when one team finishes its work and moves on to its next project before integration testing is complete. Once the hapless "rotten egg" team starts integration, it faces an uphill battle to get help from the "feature complete" teams, who must now focus on their new projects. Depending on the situation, the remaining team may be left implementing one-sided workarounds or taking on technical debt to ship the product.

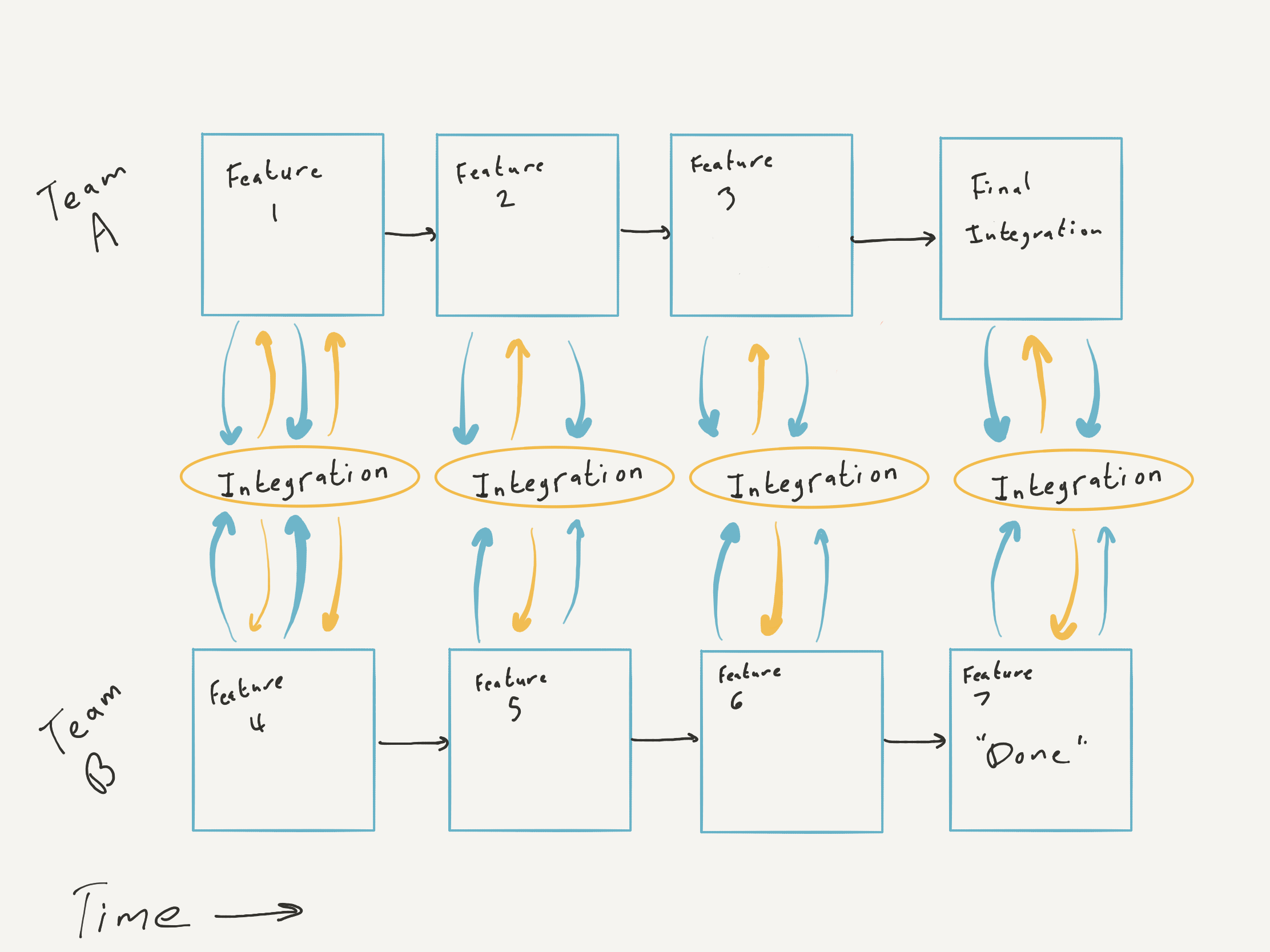

The Cure - Continuous System Integration

There are, of course, many ways to help prevent an integration apocalypse. Unit tests. Simulators. A shared test pattern library. These are great, yet physical reality is always the best simulator. The real world is always 100% accurate.

Think about a spider web. The spider doesn't cut hundreds of bits of silk to length and then assemble them on a tree. Lucky for arachnophobes, spiders don't have that kind of mental capacity. Instead, they run strands of silk from one branch to another, one at a time. Each strand has the correct length because the spider builds it on the tree, with no need for calibrated rulers or theodolites. Reality is the best test.

Imagine building a railroad with two teams laying track, intending to meet in the middle. Of course, you can (and should) use GPS, maps, surveys, and probably many more techniques to ensure the rails line up. But by far the most straightforward and least error-prone check as you work is to look up from time to time and make sure your tracks appear to converge.

Is it precise? No. But if the two sections of track obviously won't meet, nobody can dispute it. The same goes for systems. If your iPhone app and Bluetooth dongle can't talk to each other, it hardly matters how many unit tests are passing or how many Jira tickets you moved to the end of the swim lane.

With iOS apps and BLE devices, I like to start testing the app and the hardware together as early as possible. It doesn't matter if the hardware looks like a BLE demo kit wire-wrapped to a protoboard or if its only capabilities are connecting and echoing bytes back and forth. Once the hardware, app, and firmware teams have the entire system working together, however minimally, you have a baseline. Then, as parts of the system evolve, you can verify that new features work and that nothing has regressed.
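To make the "connect and echo bytes" baseline concrete, here is a minimal Swift sketch of a smoke test that could run against every new build. It assumes a hypothetical `EchoTransport` protocol standing in for the Core Bluetooth plumbing, plus a `LoopbackTransport` fake so the sketch runs without hardware; on a real rig, you would swap in a conformance that talks to the protoboard.

```swift
import Foundation

/// Hypothetical abstraction over the BLE link. On iOS, a real conformance would
/// wrap Core Bluetooth writes and notifications; this shape is illustrative only.
protocol EchoTransport {
    /// Sends `payload` to the peripheral and returns whatever it echoes back.
    func roundTrip(_ payload: Data) throws -> Data
}

/// Stand-in for early firmware whose only feature is echoing bytes back.
struct LoopbackTransport: EchoTransport {
    func roundTrip(_ payload: Data) throws -> Data { payload }
}

/// Summary of one baseline run.
struct SmokeTestResult {
    let sent: Int
    let mismatches: Int
    var passed: Bool { mismatches == 0 }
}

/// Sends each payload and counts any that fail or don't come back byte-for-byte.
func runEchoSmokeTest(over transport: EchoTransport, payloads: [Data]) -> SmokeTestResult {
    var mismatches = 0
    for payload in payloads {
        // A thrown error (for example, a dropped connection) counts as a mismatch.
        let echoed = try? transport.roundTrip(payload)
        if echoed != payload { mismatches += 1 }
    }
    return SmokeTestResult(sent: payloads.count, mismatches: mismatches)
}

// Edge cases worth keeping in the baseline: empty, single byte, sign-bit
// patterns, and 20 bytes (the largest write that fits the default 23-byte ATT MTU).
let payloads = [Data(), Data([0x00]), Data([0xFF, 0x7F, 0x80]), Data(repeating: 0xA5, count: 20)]
let result = runEchoSmokeTest(over: LoopbackTransport(), payloads: payloads)
print(result.passed)  // true
```

Keeping the transport behind a protocol means the same check can run in CI against the fake and on the bench against real hardware, so a regression in either the app or the firmware shows up as a failed round trip.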

Software and Firmware Controls

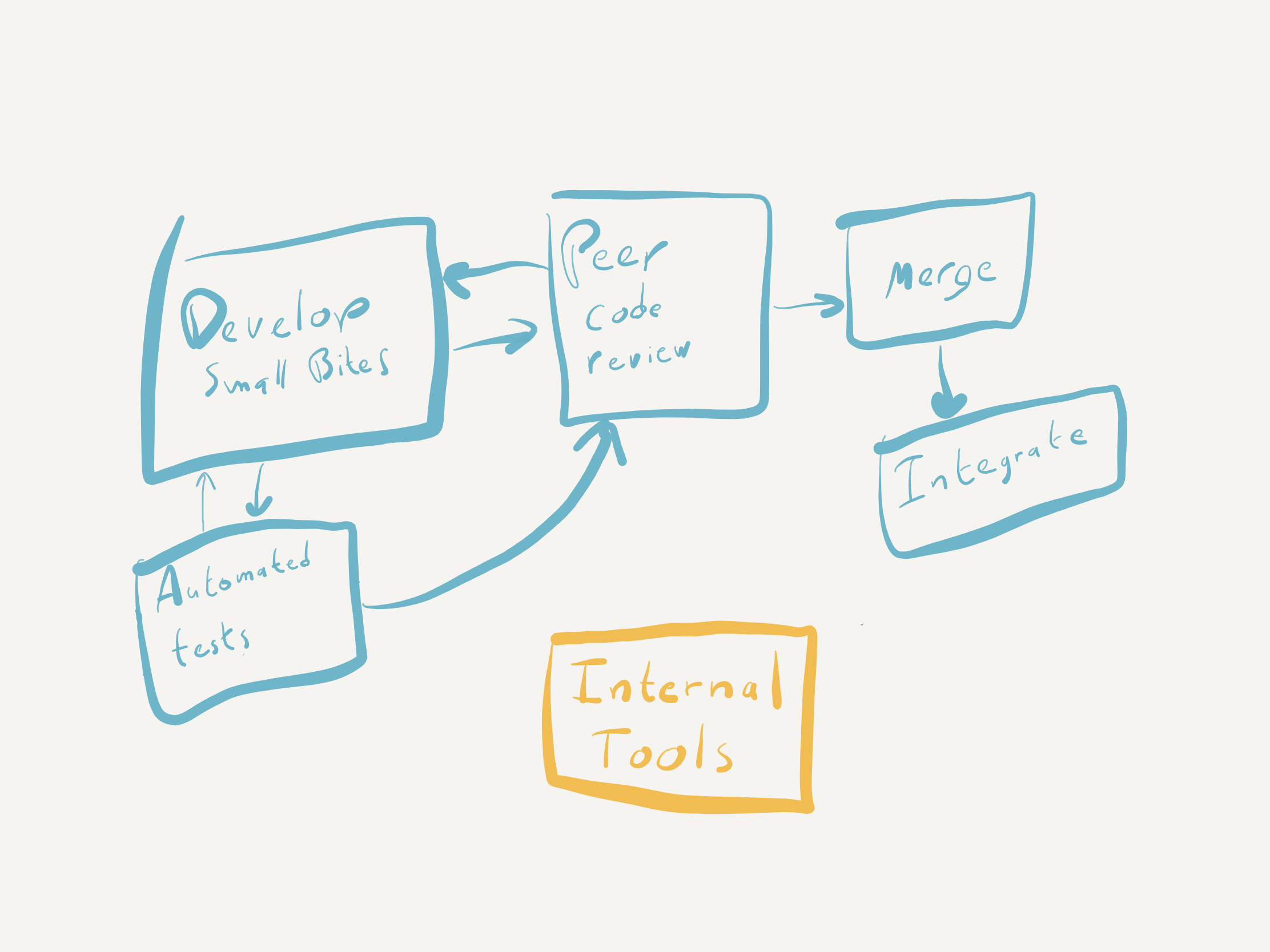

Despite what I've occasionally heard claimed, firmware and apps are both kinds of software. That means they need the same controls any important software project would use. If anything, the constraints of firmware platforms demand more software discipline.

Of course, all software goes into distributed version control. The release branch gets locked down, so all changes go through peer code review (e.g., a pull request). I know that sometimes we hire mavericks, intelligent folks who think they don't need code review. Guess what? They still need a code review.

For similar reasons, you need a build server for all software. On top of reporting test results for every commit, it should reject any PR with errors, warnings, linter issues, or failed automated tests. Likewise, a successfully merged PR should trigger a build of release candidates ready for integration testing.

You might worry that setting up a build server will cost you a day or two upfront and perhaps an hour a month of maintenance. I agree! The configuration will have an upfront and ongoing cost. However, an automated build and test will save hundreds of hours of pain and suffering. (And that's just counting any debate over indentation.)

Tooling is part of any good software strategy, but the benefits only compound when you start using these tools early in development. Wait too long to add automated tests, linting, and so on, and you're compounding technical debt instead. Not only will your team have to catch up on a pile of missed feedback, but it will also lose any opportunity to trade off project scope against quality.

When you save fixing warnings and static analysis issues for the last week of the project, it's too late to cut a feature. Cutting features is work! So however many issues you find is the amount of work you have to do -- unless you're willing to compromise on quality. Delay the introduction of software controls, and you're simply opting to add technical debt.

Tiny Bites

First, let me confess that I occasionally bite off more than a week's worth of work in a pull request. Sometimes more extensive changes are needed, and occasionally I'll misjudge the scope of a feature.

However, aim to merge each working branch back into the main branch within a few days. Teams with long-lived feature branches end up in trouble. They spend days in merge/rebase hell and sometimes find that a bad merge has accidentally regressed the codebase. Massive changes also tend to collide with other developers' feature branches, resulting in excessive rework. The more contributors the code has, the more significant the effect of large changes.

Control over a software project requires small units of work that are easy to digest in peer code review and quick to deploy in an internal release.

Finally, on the topic of control, if you intend to have automated tests, write them alongside the features. Hiring a "unit test" person late in the project usually doesn't add much value. Either everyone writes tests now, or nobody writes tests.

Unit tests and UI tests require some forethought about how the code gets written. In my opinion, writing tests later is just checking a box without extracting many of the benefits. Worse, end-of-project automated testing is often under heavy pressure to hit a coverage goal without turning up new bugs.

Many non-technical managers see automated tests as part of a quality system, which is fair. They're also part of a healthy development process. I've seen many teams struggle to reproduce and isolate a bug that would have taken a few minutes to find if they had already had a testing framework up and running. It is generally far more work to coax hardware into emitting a specific value than to use automated tests to inject test values directly into the code paths of interest.
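As a sketch of what injecting test values looks like, suppose a hypothetical BLE thermometer sends each reading as a signed 16-bit little-endian integer in hundredths of a degree Celsius. That payload format and the names `decodeTemperature` and `TemperatureDecodeError` are assumptions for illustration. Once decoding lives in a plain function, a test can feed it any byte pattern, including negative and malformed readings that would be tedious to provoke from real hardware.

```swift
import Foundation

/// Errors the decoder reports instead of silently producing a bogus reading.
enum TemperatureDecodeError: Error, Equatable {
    case wrongLength(Int)
}

/// Decodes a hypothetical thermometer payload: a signed 16-bit little-endian
/// integer in hundredths of a degree Celsius. The format is an assumption
/// made for this example, not a standard characteristic layout.
func decodeTemperature(_ payload: Data) throws -> Double {
    guard payload.count == 2 else {
        throw TemperatureDecodeError.wrongLength(payload.count)
    }
    // Copy into an array so indexing starts at 0 even if `payload` is a slice.
    let bytes = [UInt8](payload)
    let raw = Int16(bitPattern: UInt16(bytes[0]) | UInt16(bytes[1]) << 8)
    return Double(raw) / 100.0
}

// Inject a byte pattern straight into the decoder; no peripheral required.
let reading = try? decodeTemperature(Data([0xA0, 0x0F]))  // 40.0 °C (104 °F)
```

In an iOS project, checks like these would typically live in an XCTest target; the decoder itself stays free of Core Bluetooth types so it can be exercised anywhere.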

Logging and Internal Tools

Unlike the many apps that act as clients for web services, BLE apps often involve many threads of execution. In addition, Bluetooth peripherals operate asynchronously from the app. As a result, many of the more challenging bugs in BLE systems involve mistakes in asynchronous programming. For that reason and others, a robust logging system will help save your sanity.

Logs also help reconstruct a bug from a description as vague as, "I connected to our BLE thermometer with the app, and it reported a temperature of 0 degrees. However, the reading made no sense because I was standing in the parking lot, where the temperature was closer to 104 degrees."

A log file might reveal that this thermometer's calibration value was much higher than expected and that the app calculated the calibrated value incorrectly. Or perhaps the device had run out of storage space for temperature readings, and the buffer didn't wrap around correctly. The log provides the kind of context your app's UI won't.

As a start, I like to log every error encountered in the app and firmware. That includes any errors from Core Bluetooth or from the BLE module in the firmware. It should also include "out of spec" behavior, such as encountering a value outside an enumeration or reaching a default switch case we never expect to hit.
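Here is a minimal sketch of logging "out of spec" behavior instead of silently absorbing it. `DeviceState`, its raw values, the command opcodes, and the `OutOfSpecLog` collector are all hypothetical; in an app, these messages would go to your real logging system.

```swift
import Foundation

/// Hypothetical states a peripheral reports in a one-byte status field.
enum DeviceState: UInt8 {
    case idle = 0
    case measuring = 1
    case charging = 2
}

/// Minimal collector so the example can show what gets recorded.
final class OutOfSpecLog {
    private(set) var entries: [String] = []
    func record(_ message: String) { entries.append(message) }
}

/// Decodes a status byte. A raw value outside the enumeration is logged
/// rather than silently mapped to some default state.
func decodeState(_ byte: UInt8, log: OutOfSpecLog) -> DeviceState? {
    guard let state = DeviceState(rawValue: byte) else {
        log.record("out of spec: unknown DeviceState raw value \(byte)")
        return nil
    }
    return state
}

/// Names a hypothetical command opcode. The (assumed) spec defines only 0x01
/// and 0x02, so reaching `default` is logged as out-of-spec behavior.
func commandName(for opcode: UInt8, log: OutOfSpecLog) -> String {
    switch opcode {
    case 0x01: return "start"
    case 0x02: return "stop"
    default:
        log.record("out of spec: unexpected opcode 0x" + String(opcode, radix: 16, uppercase: true))
        return "unknown"
    }
}

let specLog = OutOfSpecLog()
let known = decodeState(1, log: specLog)          // .measuring, nothing logged
let unknown = decodeState(9, log: specLog)        // nil, one entry logged
let name = commandName(for: 0x7F, log: specLog)   // "unknown", one more entry
print(specLog.entries.count)  // 2
```

Returning `nil` (or an explicit "unknown") keeps the app running, while the log entry preserves the exact unexpected value for whoever investigates later.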

I've also never regretted logging every BLE interaction. When your system behaves in surprising ways, BLE logs help you reconstruct the sequence of events or values leading to the bug.
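One way to log every BLE interaction is a small append-only log that records the time, direction, characteristic, and raw bytes in hex. The sketch below is an assumption, not a Core Bluetooth API: characteristic names are plain strings (in an app they might come from `CBUUID` values), and the names `BLEInteractionLog` and `BLELogEntry` are hypothetical. A lock guards the entries because delegate callbacks and other app code may log from different threads.

```swift
import Foundation
import Dispatch

/// Kind of BLE interaction, from the app's point of view.
enum BLEDirection: String {
    case write, read, notify
}

/// One logged interaction.
struct BLELogEntry {
    let timestamp: Date
    let direction: BLEDirection
    let characteristic: String
    let payload: Data

    private static let hexDigits = Array("0123456789ABCDEF")

    /// One log line: seconds since 1970, direction, characteristic, raw bytes in hex.
    var line: String {
        let hex = payload.map { byte in
            String([BLELogEntry.hexDigits[Int(byte >> 4)], BLELogEntry.hexDigits[Int(byte & 0x0F)]])
        }.joined(separator: " ")
        let time = String(format: "%.3f", timestamp.timeIntervalSince1970)
        return "\(time) \(direction.rawValue) \(characteristic) [\(hex)]"
    }
}

/// Append-only, thread-safe interaction log; the lock serializes all access.
final class BLEInteractionLog: @unchecked Sendable {
    private let lock = NSLock()
    private var entries: [BLELogEntry] = []

    func record(_ direction: BLEDirection, _ characteristic: String,
                _ payload: Data, at timestamp: Date = Date()) {
        lock.lock()
        defer { lock.unlock() }
        entries.append(BLELogEntry(timestamp: timestamp, direction: direction,
                                   characteristic: characteristic, payload: payload))
    }

    /// Snapshot of all lines in the order they were recorded.
    func lines() -> [String] {
        lock.lock()
        defer { lock.unlock() }
        return entries.map { $0.line }
    }
}

let trafficLog = BLEInteractionLog()
trafficLog.record(.write, "calibration", Data([0x01, 0xF4]), at: Date(timeIntervalSince1970: 0))
trafficLog.record(.notify, "temperature", Data([0xA0, 0x0F]), at: Date(timeIntervalSince1970: 0.25))
print(trafficLog.lines().joined(separator: "\n"))
// 0.000 write calibration [01 F4]
// 0.250 notify temperature [A0 0F]

// Recording from many threads at once is safe because of the lock.
let burstLog = BLEInteractionLog()
DispatchQueue.concurrentPerform(iterations: 100) { i in
    burstLog.record(.notify, "temperature", Data([UInt8(i)]))
}
print(burstLog.lines().count)  // 100
```

Logging raw bytes rather than decoded values is deliberate: if the decoder itself is the bug, the log still shows exactly what arrived over the air.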

In addition to logging, I've found it helpful to build simple tools into iOS apps to allow internal users to collect diagnostic and state data from all aspects of the system. The nature of these tools depends on your application but might include things like viewing state variables, querying values from connected peripherals, running stress tests, and so on.
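A diagnostics screen can start as simply as asking each subsystem to describe its own state. The sketch below assumes a hypothetical `DiagnosticSource` protocol and two illustrative conformances; the field names and sample values are invented for the example. It builds a plain-text report with stable key ordering so reports from different testers can be compared line by line.

```swift
import Foundation

/// Anything that can describe its own state for an internal diagnostics screen.
protocol DiagnosticSource {
    var diagnosticName: String { get }
    func diagnosticValues() -> [String: String]
}

/// Hypothetical connection state an app might track for a peripheral.
struct ConnectionDiagnostics: DiagnosticSource {
    var isConnected: Bool
    var rssi: Int
    var reconnectCount: Int
    let diagnosticName = "connection"

    func diagnosticValues() -> [String: String] {
        ["connected": String(isConnected), "rssi": "\(rssi) dBm", "reconnects": String(reconnectCount)]
    }
}

/// Hypothetical values queried from the connected peripheral.
struct FirmwareDiagnostics: DiagnosticSource {
    var version: String
    var storageUsedPercent: Int
    let diagnosticName = "firmware"

    func diagnosticValues() -> [String: String] {
        ["version": version, "storageUsed": "\(storageUsedPercent)%"]
    }
}

/// Flattens every source into "source.key = value" lines, sorted by key
/// within each source so the output order is deterministic.
func diagnosticReport(_ sources: [DiagnosticSource]) -> String {
    sources.flatMap { source -> [String] in
        source.diagnosticValues()
            .sorted { $0.key < $1.key }
            .map { "\(source.diagnosticName).\($0.key) = \($0.value)" }
    }.joined(separator: "\n")
}

let report = diagnosticReport([
    ConnectionDiagnostics(isConnected: true, rssi: -67, reconnectCount: 3),
    FirmwareDiagnostics(version: "1.4.2", storageUsedPercent: 100),
])
print(report)
// connection.connected = true
// connection.reconnects = 3
// connection.rssi = -67 dBm
// firmware.storageUsed = 100%
// firmware.version = 1.4.2
```

A report like this can be attached to a bug ticket alongside the logs, which is often enough to spot problems such as a device whose storage is full.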

TLDR

In short, every iOS + BLE project needs early and frequent integration of the entire system, automated software controls, modern software development processes, small units of work, and excellent tools for debugging and monitoring the system.